Table of Contents

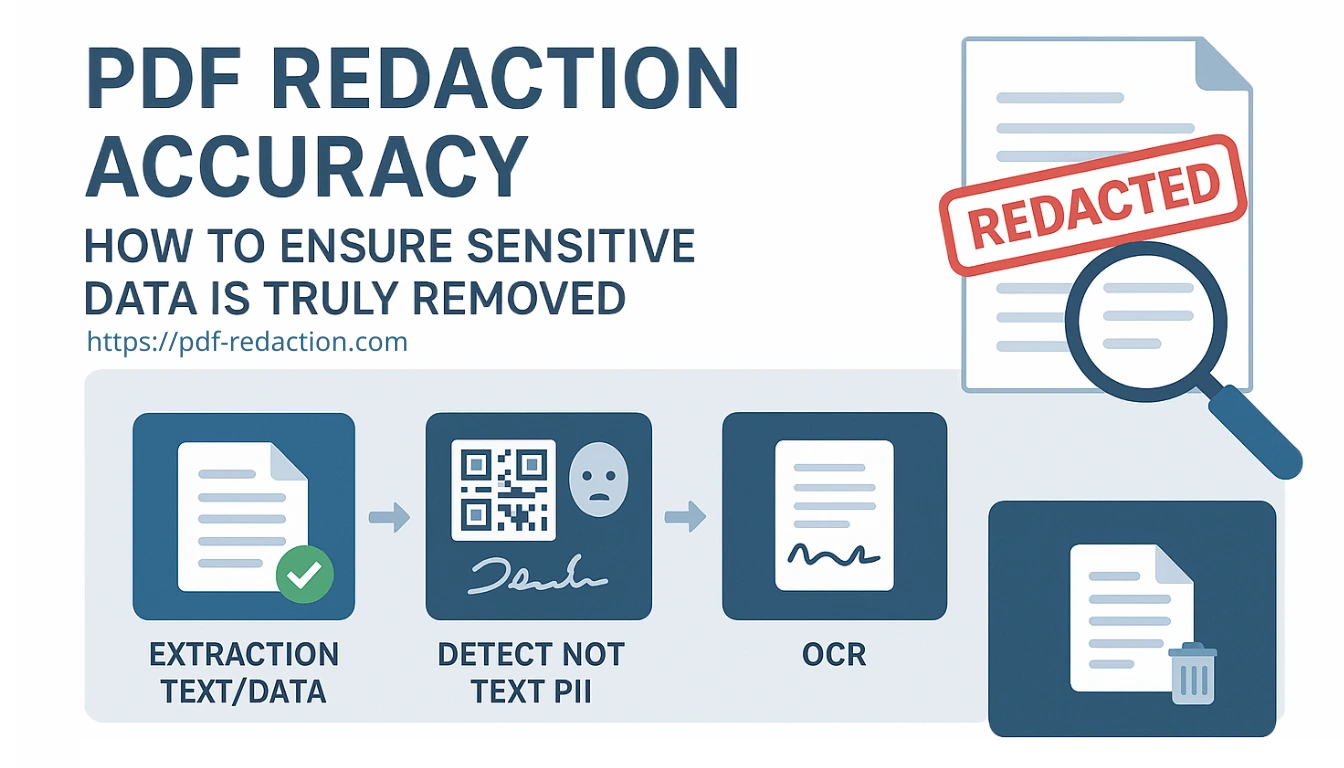

PDF Redaction Accuracy: How to Ensure Sensitive Data is Truly Removed

Co-Founder / Machine Learning & Data Expert

Ensuring Accuracy in PDF Redaction: Why Every Stage Matters

In the domain of secure document handling, redaction is more than simply "blacking out" text. A robust redaction system must reliably detect, locate, and remove or mask all sensitive elements - whether they are text, images, metadata, or non-textual artifacts - and do so without altering or corrupting the rest of the content. A redaction that misses a single piece of personally identifiable information (PII) or leaves hidden metadata is a liability.

Below, we will break down each major stage in a modern PDF redaction pipeline (such as ours at PDF-Redaction) and discuss how accuracy is achieved (and challenged) at each step.

1. Extraction of Text / Data

Searchable text, including vertical or rotated text

In native or "true" PDF documents, much of the content is represented as text objects (glyphs) with positioning, font, and transformation metadata. A good redaction engine will:

- Parse the page’s content stream and text runs

- Handle transformation matrices so that rotated (for example 90° or 270°) or sheared text is correctly read in spatial order

- Detect vertical writing modes (common in East Asian documents) and recover the correct glyph order
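Here is a minimal Python sketch of the transformation-matrix step. It assumes each glyph arrives with its own text matrix `[a b c d e f]`, as in PDFs that position characters individually, and that the current transformation matrix is the identity. The baseline direction comes from `atan2(b, a)`, and glyphs are ordered by projecting their origins onto that direction. `Glyph`, `baseline_angle`, and `reading_order` are hypothetical names, not part of any PDF library.

```python
import math
from dataclasses import dataclass


@dataclass
class Glyph:
    char: str
    # Text matrix [a b c d e f] for this glyph (hypothetical input format);
    # (e, f) is the glyph origin in user space.
    matrix: tuple[float, float, float, float, float, float]


def baseline_angle(matrix) -> float:
    """Baseline direction in degrees, counter-clockwise from the x-axis."""
    a, b = matrix[0], matrix[1]
    return math.degrees(math.atan2(b, a)) % 360


def reading_order(glyphs: list[Glyph]) -> str:
    """Sort the glyphs of one text run by their position along its baseline."""
    if not glyphs:
        return ""
    theta = math.radians(baseline_angle(glyphs[0].matrix))
    ux, uy = math.cos(theta), math.sin(theta)  # unit vector along the baseline

    def along_baseline(g: Glyph) -> float:
        e, f = g.matrix[4], g.matrix[5]
        return e * ux + f * uy  # projection of the glyph origin onto the baseline

    return "".join(g.char for g in sorted(glyphs, key=along_baseline))
```

For text rotated 90° (matrix `(0, 1, -1, 0, e, f)`), the baseline points up the page, so glyphs are ordered by increasing `f` no matter what order they appear in the content stream. A production extractor would also fold in the CTM and split runs with mixed orientations.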

Accuracy challenges:

- Complex PDFs sometimes embed text as individual characters with separate transformations, so reassembling word boundaries can be error-prone

- Nonstandard encodings or font subsets may require mapping glyph codes to Unicode, and in some cases this mapping is incomplete or ambiguous
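Word reassembly is usually a gap heuristic. The sketch below assumes the characters already share one horizontal line and that explicit space glyphs have been dropped. It starts a new word whenever the horizontal gap exceeds a fraction of the average character width. The 0.3 ratio and the `PlacedChar`/`group_into_words` names are illustrative assumptions; real documents need per-font tuning and may encode spacing through kerning instead.

```python
from dataclasses import dataclass


@dataclass
class PlacedChar:
    char: str
    x0: float  # left edge in page units
    x1: float  # right edge in page units


def group_into_words(chars: list[PlacedChar], gap_ratio: float = 0.3) -> list[str]:
    """Join same-line characters into words; a gap wider than gap_ratio times
    the average character width is treated as a word boundary."""
    if not chars:
        return []
    chars = sorted(chars, key=lambda c: c.x0)
    avg_width = sum(c.x1 - c.x0 for c in chars) / len(chars)
    words, current = [], chars[0].char
    for prev, cur in zip(chars, chars[1:]):
        if cur.x0 - prev.x1 > gap_ratio * avg_width:
            words.append(current)  # gap too wide: close the current word
            current = cur.char
        else:
            current += cur.char
    words.append(current)
    return words
```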

With a well-tuned extraction layer, you should expect very high accuracy (≥99%) for normal horizontal text, and slightly lower but still strong performance on rotated or vertical text.

Text embedded in images

Certain documents embed text purely as images (for example scanned reports or graphical letterheads). That text is invisible to the PDF’s text layer and must be processed by OCR later (see stage 3).

Hybrid PDFs complicate this: a single page may combine vector text with image overlays. A careful redaction engine needs to flag image regions that contain (or likely contain) text so they can be processed downstream.

Accuracy challenges:

- Low image resolution, compression artifacts, or nonuniform backgrounds can reduce readability

- Distorted or curved text (for example on logos) may defeat standard OCR
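In a PDF, an image XObject is painted into the unit square and placed on the page by the current transformation matrix (CTM), so its footprint can be computed without decoding any pixels. The minimal sketch below assumes you already collect the CTM in effect at each image's `Do` operator. It derives each footprint and flags every image large enough to hold legible text for OCR. The 10-point cut-off and the function names are hypothetical; flagging generously fits a recall-first goal, since running OCR on an image without text simply returns nothing.

```python
Box = tuple[float, float, float, float]  # (x0, y0, x1, y1) in PDF user space


def image_bbox(ctm: tuple[float, float, float, float, float, float]) -> Box:
    """Images are painted into the unit square, which the CTM maps onto the page."""
    a, b, c, d, e, f = ctm
    corners = [(0, 0), (1, 0), (0, 1), (1, 1)]
    xs = [a * x + c * y + e for x, y in corners]
    ys = [b * x + d * y + f for x, y in corners]
    return (min(xs), min(ys), max(xs), max(ys))


def regions_for_ocr(image_ctms, min_side_pt: float = 10.0) -> list[Box]:
    """Flag every placed image large enough to hold legible text (hypothetical cut-off)."""
    boxes = [image_bbox(m) for m in image_ctms]
    return [b for b in boxes if min(b[2] - b[0], b[3] - b[1]) >= min_side_pt]
```

Taking the min/max over all four corners keeps the footprint correct for rotated or flipped placements as well.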

2. Detecting Non-Text PII (Beyond Plain Text)

A robust redactor must spot sensitive elements not encoded as plain text. Common categories include:

QR codes and barcodes

These codes can embed structured data such as URLs, identifiers, or contact info. A redaction system may:

- Scan the page for 1D barcodes or 2D codes (QR, DataMatrix, Aztec, etc.)

- Decode the content and assess whether it contains sensitive data

- Map the bounding box of the code region for redaction

Accuracy considerations:

- Dense or damaged codes may fail to decode, but the system can still flag them for manual review

- Overlapping or partially obscured codes may be hard to detect precisely
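The triage logic for decoded codes can be sketched as follows. We assume a barcode decoder (for example, one built on ZBar) has already produced a bounding box and, when decoding succeeded, the payload string. The `CodeDetection` type and the three patterns are hypothetical examples, not an exhaustive PII list. Codes that were located but could not be decoded go to manual review, matching the fallback described above.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class CodeDetection:
    bbox: tuple[float, float, float, float]  # (x0, y0, x1, y1) on the page
    payload: Optional[str]  # None when a symbol was located but not decoded


# Illustrative patterns only; extend for your own data types.
SENSITIVE_PATTERNS = [
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),   # e-mail addresses
    re.compile(r"https?://\S+\?\S+"),           # URLs carrying query parameters
    re.compile(r"^BEGIN:VCARD", re.MULTILINE),  # contact cards
]


def triage(detections: list[CodeDetection]):
    """Split detections into boxes to redact and boxes needing manual review."""
    redact, review = [], []
    for det in detections:
        if det.payload is None:
            review.append(det.bbox)  # undecodable: let a human decide
        elif any(p.search(det.payload) for p in SENSITIVE_PATTERNS):
            redact.append(det.bbox)
    return redact, review
```

A stricter policy would simply redact every detected code. Decoding first lets harmless codes, such as a plain product URL, stay readable.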

Faces

Documents can contain photographs (for example ID photos, group shots). Redaction tools can run a face detection model:

- Use CNN-based face detectors (MTCNN, RetinaFace, etc.) to find bounding boxes of faces

- Flag them for redaction regardless of identity

Accuracy challenges:

- Side profiles, occlusions (glasses, masks), low resolution, or extreme lighting make detection harder

- False positives on face-like patterns, and missed detections of small faces
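Detector output usually needs some post-processing before it becomes a redaction box. This sketch assumes a face detector (such as MTCNN or RetinaFace) returns pixel boxes with confidence scores. The hypothetical `faces_to_redact` keeps detections above a deliberately low threshold to favor recall on small or occluded faces. It then pads each box so the redaction covers the whole head rather than only the tight face crop. Both the 0.3 threshold and the 15% padding are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class FaceDetection:
    box: tuple[int, int, int, int]  # (x0, y0, x1, y1) in image pixels
    score: float                    # detector confidence in [0, 1]


def faces_to_redact(dets, img_w: int, img_h: int,
                    min_score: float = 0.3, pad_ratio: float = 0.15):
    """Keep low-confidence detections too (recall first), pad them, clip to the image."""
    boxes = []
    for d in dets:
        if d.score < min_score:
            continue
        x0, y0, x1, y1 = d.box
        px = int((x1 - x0) * pad_ratio)
        py = int((y1 - y0) * pad_ratio)
        boxes.append((max(0, x0 - px), max(0, y0 - py),
                      min(img_w, x1 + px), min(img_h, y1 + py)))
    return boxes
```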

Signatures

Signatures are often freeform strokes overlapping other content. To detect them:

- Use stroke or curve detectors, edge-based heuristics, or a trained segmentation model

- In structured forms, regions marked "signature" can be prioritized

Accuracy challenges:

- Stylized or faint signatures may be missed

- Scribbles or decorative marks may be falsely detected as signatures
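One way to combine a signature model with form structure is to treat a form field labelled as a signature as a prior. In this sketch, candidate regions (from whatever stroke detector or segmentation model is in use) carry a model score. A candidate lying mostly inside a field whose name mentions "sign" receives a fixed boost. The boost, the threshold, and the field-name rule are hypothetical choices for illustration.

```python
Box = tuple[float, float, float, float]  # (x0, y0, x1, y1)


def fraction_inside(inner: Box, outer: Box) -> float:
    """Share of inner's area that lies inside outer."""
    w = min(inner[2], outer[2]) - max(inner[0], outer[0])
    h = min(inner[3], outer[3]) - max(inner[1], outer[1])
    area = (inner[2] - inner[0]) * (inner[3] - inner[1])
    return max(w, 0) * max(h, 0) / area if area > 0 else 0.0


def signatures_to_redact(candidates, form_fields, boost: float = 0.3, threshold: float = 0.5):
    """candidates: [(box, model_score)]; form_fields: [(field_name, box)].
    A candidate mostly inside a field whose name mentions 'sign' gets a score boost."""
    kept = []
    for box, score in candidates:
        in_sig_field = any("sign" in name.lower() and fraction_inside(box, fbox) > 0.5
                           for name, fbox in form_fields)
        if score + (boost if in_sig_field else 0.0) >= threshold:
            kept.append(box)
    return kept
```

The boost lets faint or stylized strokes in a known signature box clear the bar, while the same weak score elsewhere on the page is ignored. This trades a few extra redactions inside signature fields for fewer false positives on decorative marks.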

3. OCR (for Images and Image-Based PDF Pages)

OCR is the workhorse for converting pixels into text. Accuracy here is critical because every character OCR misses is a potential redaction gap.

Printed text OCR

- Use a state-of-the-art OCR engine

- Preprocess images (binarization, deskewing, noise removal) to maximize recognition

- Support layout analysis (columns, text flow)
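Binarization is the simplest of these preprocessing steps to show. Below is a pure-Python version of Otsu's method, which picks the grayscale threshold that maximizes the between-class variance of dark (ink) and light (paper) pixels. It assumes 8-bit grayscale input as a flat, row-major list with dark text on a light background. In production you would typically call OpenCV's `cv2.threshold` with the `THRESH_OTSU` flag instead of looping in Python.

```python
def otsu_threshold(pixels: list[int]) -> int:
    """Otsu's method for 8-bit grayscale values; pixels <= threshold count as ink."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    sum_bg, weight_bg = 0, 0
    best_t, best_between = 0, -1.0
    for t in range(256):
        weight_bg += hist[t]
        sum_bg += t * hist[t]
        weight_fg = total - weight_bg
        if weight_bg == 0:
            continue
        if weight_fg == 0:
            break
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        # Between-class variance, up to a constant factor of 1 / total**2
        between = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if between > best_between:
            best_t, best_between = t, between
    return best_t


def binarize(pixels: list[int], width: int) -> list[list[int]]:
    """Threshold a row-major grayscale image into 0 (ink) / 255 (paper)."""
    t = otsu_threshold(pixels)
    rows = [pixels[i:i + width] for i in range(0, len(pixels), width)]
    return [[0 if p <= t else 255 for p in row] for row in rows]
```

A single global threshold struggles with uneven lighting. That is one reason nonuniform backgrounds appear among the challenges below; adaptive (local) thresholding is the usual fallback.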

Accuracy challenges:

- Low resolution or compressed images degrade recognition

- Curved baselines, mixed fonts, or overlapping graphics introduce errors

Handwritten text OCR

Many sensitive documents include handwritten notes, forms, or signatures. Detecting and redacting handwritten text requires specialized handwriting OCR models:

- CNN-RNN hybrid models or transformer-based handwriting recognition

- Training on large handwriting datasets to support multiple scripts and writing styles

Accuracy challenges:

- Handwriting varies greatly between individuals, with inconsistent shapes and spacing

- Poor scan quality, faint ink, or cursive styles reduce accuracy

- Mixed printed and handwritten text on the same page can confuse models

Expected performance: Printed text OCR can reach ≥98% accuracy on clean scans, but handwritten OCR is often lower (70-90%) depending on writing quality. For redaction, it is critical to aim for high recall, ensuring all possible sensitive handwriting is flagged, even if precision suffers.
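A recall-first policy for handwriting can be expressed as a routing rule: a low-confidence transcript should never be the reason a region goes unredacted. In the sketch below, `HandwritingRegion` and `route_handwriting` are hypothetical. `is_sensitive` stands in for whatever detector stage 4 provides, and the 0.8 confidence cut-off is an assumption to tune on your own documents.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class HandwritingRegion:
    box: tuple[float, float, float, float]  # region on the page
    text: str                               # recognizer transcript
    confidence: float                       # mean recognizer confidence, 0..1


def route_handwriting(regions, is_sensitive: Callable[[str], bool],
                      min_confidence: float = 0.8):
    """Redact regions whose transcript looks sensitive; send low-confidence
    regions to review instead of trusting a possibly wrong transcript."""
    redact, review = [], []
    for r in regions:
        if is_sensitive(r.text):
            redact.append(r.box)
        elif r.confidence < min_confidence:
            review.append(r.box)
    return redact, review
```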

4. Sensitive Data Detection via AI and ML

Once text is available, the system must decide which content is sensitive. Methods include:

- Named Entity Recognition (NER) models for names, addresses, account numbers, etc.

- Regular expressions for structured patterns (credit cards, IDs, bank account formats)

- Context-aware models (transformers such as BERT) for ambiguous cases, and large language models (LLMs) for complex patterns
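For structured patterns, a regular expression alone produces many false positives, so it is common to pair it with a checksum. The sketch below finds 13- to 19-digit sequences, optionally separated by spaces or hyphens, and keeps only those that pass the Luhn check used by payment card numbers. The pattern and function names are illustrative. Real deployments also cover other ID formats and use context words.

```python
import re

# 13-19 digits, optionally separated by single spaces or hyphens
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(number: str) -> bool:
    """Luhn checksum: double every second digit from the right."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> list[tuple[int, int]]:
    """Character spans of candidates that also pass the Luhn check."""
    return [m.span() for m in CARD_CANDIDATE.finditer(text) if luhn_valid(m.group())]
```

The returned character spans are exactly what the coordinate-mapping stage needs to locate the number on the page.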

Accuracy tradeoffs:

- Recall vs. precision: broad rules produce more false positives, while narrow rules miss sensitive content

- Domain specificity: models trained on general text may underperform on legal, medical, or financial documents

- Multilingual support adds complexity

A strong system targets ≥95% recall with acceptable precision, while supporting manual review.
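Turning a recall target into an operating point is mechanical once you have labelled validation data. This sketch assumes each candidate has a model score and a ground-truth label. It returns the highest cut-off that still flags at least the target share of truly sensitive items, and a helper reports the precision you pay for it. The function names and validation setup are hypothetical.

```python
import math


def threshold_for_recall(scores, labels, target_recall: float = 0.95) -> float:
    """Highest score cut-off that still flags at least target_recall of the
    labelled-sensitive validation items (flag when score >= cut-off)."""
    positives = sorted((s for s, y in zip(scores, labels) if y), reverse=True)
    if not positives:
        raise ValueError("validation data contains no sensitive items")
    # Tiny epsilon keeps floating-point noise from pushing ceil() up by one
    needed = math.ceil(target_recall * len(positives) - 1e-9)
    return positives[max(needed, 1) - 1]


def precision_at(scores, labels, cutoff: float) -> float:
    """Share of flagged items that are truly sensitive (1.0 if nothing is flagged)."""
    flagged = [y for s, y in zip(scores, labels) if s >= cutoff]
    return sum(flagged) / len(flagged) if flagged else 1.0
```

Everything scoring between this cut-off and a higher, "confident" cut-off is a natural candidate for the manual review queue.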

5. Matching Detected Data Back to Original Coordinates

Detection is only useful if we can accurately map sensitive items back to the PDF page:

- Map extracted or OCR tokens to bounding boxes in the page coordinate system

- Preserve per-word or per-character coordinates for precision

- Use bounding boxes from image analysis for faces, QR codes, or signatures
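Mapping a detected span back to the page is easiest when extraction keeps one box per character. The hypothetical `boxes_for_span` below assumes the text passed to the detector is exactly the concatenation of those characters, so character offsets line up. It unions the boxes of the detected characters and produces one rectangle per line, so a name that wraps across lines does not yield one oversized box covering unrelated text.

```python
from dataclasses import dataclass


@dataclass
class CharBox:
    char: str
    box: tuple[float, float, float, float]  # (x0, y0, x1, y1) in page coordinates
    line: int                               # line index assigned during extraction


def boxes_for_span(chars: list[CharBox], start: int, end: int):
    """Union the boxes of chars[start:end], one rectangle per text line."""
    per_line = {}
    for c in chars[start:end]:
        if c.char.isspace():
            continue  # spaces carry no ink and can have odd widths
        if c.line in per_line:
            x0, y0, x1, y1 = per_line[c.line]
            per_line[c.line] = (min(x0, c.box[0]), min(y0, c.box[1]),
                                max(x1, c.box[2]), max(y1, c.box[3]))
        else:
            per_line[c.line] = c.box
    return [per_line[k] for k in sorted(per_line)]
```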

Accuracy challenges:

- Mis-split or merged glyphs can shift coordinates

- OCR bounding boxes may deviate from true strokes

- Rotated or transformed text requires consistent coordinate transforms

A robust system keeps mapping error small and makes sure each redaction box fully covers the visible region of the sensitive item.
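OCR boxes arrive in pixel coordinates of the rendered image, while PDF user space is measured in points (1/72 inch) with the origin at the bottom-left. The sketch below converts between the two for an unrotated page whose MediaBox starts at (0, 0). It adds a small padding so that slight OCR box deviations do not leave stroke edges visible. The padding value and the function name are assumptions; rotated pages or offset crop boxes need an extra transform.

```python
def pixel_box_to_pdf(box_px, dpi: float, page_height_pt: float, pad_pt: float = 1.0):
    """Convert an OCR box (pixels, origin top-left) to PDF user space
    (points, origin bottom-left) for an unrotated page rendered at `dpi`."""
    scale = 72.0 / dpi  # points per pixel
    x0, y0, x1, y1 = box_px
    return (x0 * scale - pad_pt,
            page_height_pt - y1 * scale - pad_pt,  # pixel bottom edge -> PDF lower y
            x1 * scale + pad_pt,
            page_height_pt - y0 * scale + pad_pt)  # pixel top edge -> PDF upper y
```

The y-axis flip is the most common source of boxes that land in the wrong place: a box near the top of the rendered image belongs near the top of the page, which is a large y value in PDF space.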

6. Metadata and Hidden Structure Cleaning

After visible content is redacted, hidden layers must be sanitized:

- Document metadata (title, author, subject, keywords)

- Embedded XMP or XML metadata

- Annotations, form fields, embedded JavaScript, attachments

- Accessibility tags and alternate text

- Incremental updates or revision history

Accuracy challenges:

- Easy to miss obscure metadata fields or hidden object streams

- Incomplete cleanup can expose sensitive information to forensic analysis

A strong pipeline guarantees complete metadata sanitization.
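A cheap safety net is to scan the output file for names that should no longer be there. The sketch below is a heuristic audit, not a sanitizer. It searches the raw bytes for dictionary keys such as `/Author`, `/JS`, or `/EmbeddedFile` and counts `%%EOF` markers to detect incremental updates. It also reports object streams (`/ObjStm`), because keys inside compressed streams are invisible to a byte scan. The name list is an illustrative assumption and deliberately broad (for example, `/Title` also appears in bookmarks), so hits are prompts for review rather than proof of a leak.

```python
import re

# Illustrative list of name tokens that deserve a second look after sanitization
RISKY_NAMES = ["Author", "Title", "Subject", "Keywords", "Metadata", "Annots",
               "AcroForm", "JavaScript", "JS", "EmbeddedFiles", "EmbeddedFile",
               "Alt", "ActualText", "StructTreeRoot"]


def _has_name(data: bytes, name: str) -> bool:
    # A PDF name ends at whitespace or a delimiter, so "/Alt" must not match "/Alternate"
    pattern = rb"/" + re.escape(name.encode()) + rb"(?![^\s()<>\[\]{}/%])"
    return re.search(pattern, data) is not None


def audit_pdf_bytes(data: bytes) -> list[str]:
    """Heuristic byte-level audit of a redacted PDF; returns human-readable findings."""
    findings = [f"/{name} present" for name in RISKY_NAMES if _has_name(data, name)]
    eof_count = data.count(b"%%EOF")
    if eof_count > 1:
        findings.append(f"{eof_count} %%EOF markers: incremental updates may retain old content")
    if _has_name(data, "ObjStm"):
        findings.append("object streams present: compressed objects were not inspected")
    return findings
```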

Accuracy Across the Pipeline

The accuracy of the full redaction process is only as strong as its weakest link. Even perfect detection is wasted if coordinate mapping is wrong. Conversely, precise coordinate mapping cannot compensate for weak detection: content that is never detected is never redacted.
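Suppose stages miss items independently (a simplifying assumption) and extraction accuracy is treated as a recall. Then end-to-end recall is the product of the per-stage recalls, so it can never exceed the weakest stage. Using only the figures quoted above, ≥99% extraction accuracy and a ≥95% detection recall target, the pipeline is already down to about 94% before coordinate mapping or metadata cleanup are counted. `end_to_end_recall` is a hypothetical helper for this back-of-the-envelope check.

```python
import math


def end_to_end_recall(stage_recalls: dict[str, float]) -> float:
    """Probability that a sensitive item survives every stage,
    assuming each stage misses items independently."""
    return math.prod(stage_recalls.values())


# 0.99 * 0.95 = 0.9405: lower than either stage on its own
overall = end_to_end_recall({"extraction": 0.99, "detection": 0.95})
```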

Why PDF-Redaction Matters

At PDF-Redaction we combine AI-powered detection with the option for manual review to balance automation and precision. We focus on:

- Local on-device processing for privacy and security

- Fast performance (around one page per second) without sacrificing accuracy

- Support for PII, PHI, and financial data types using AI models

By carefully integrating each stage - from extraction through metadata cleanup - we aim to deliver reliable, safe, and auditable redaction results.